Settings

Open the settings by clicking the Creator agent in the agent list.

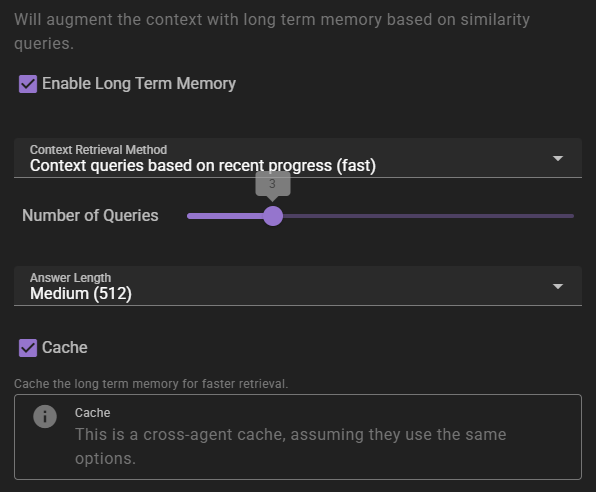

Long Term Memory

If enabled, the Memory Agent selects information relevant to the scene and injects it into the context.

Context Retrieval Method

The method used to select long-term memory context.

- Context queries based on recent context: takes the last 3 messages in the scene and selects relevant context from them. This is the fastest method, but the results may not always be the most relevant.
- Context queries generated by AI: generates a set of context queries based on the current scene and selects relevant context from them. This is slower, but may produce more relevant results.
- AI compiled questions and answers: uses the AI to generate a set of questions and answers based on the current scene and selects relevant context from them. This is the slowest method, and not necessarily better than the other two.

Number of queries

This setting means different things depending on the context retrieval method.

- For Context queries based on recent context, this is the number of messages to consider.
- For Context queries generated by AI, this is the number of queries to generate.
- For AI compiled questions and answers, this is the number of questions to generate.
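To make the per-method meaning of this value concrete, here is a small Python sketch. It is purely illustrative and does not reflect the tool's actual internals: the names `RetrievalMethod`, `describe_number_of_queries` and `recent_context_queries` are hypothetical, and the recent-context helper simply assumes "messages to consider" means the most recent N messages of the scene.

```python
from enum import Enum


class RetrievalMethod(Enum):
    # Hypothetical names for the three Context Retrieval Method options
    RECENT_CONTEXT = "Context queries based on recent context"
    AI_QUERIES = "Context queries generated by AI"
    AI_QA = "AI compiled questions and answers"


def describe_number_of_queries(method: RetrievalMethod, n: int) -> str:
    """Explain what the Number of queries value n controls for a method."""
    if method is RetrievalMethod.RECENT_CONTEXT:
        return f"consider the last {n} messages"
    if method is RetrievalMethod.AI_QUERIES:
        return f"generate {n} queries"
    if method is RetrievalMethod.AI_QA:
        return f"generate {n} questions"
    raise ValueError(f"unknown method: {method}")


def recent_context_queries(messages: list[str], n: int) -> list[str]:
    """Assumed behavior: use the last n scene messages as memory queries."""
    # Guard n <= 0 explicitly, since messages[-0:] would return the whole list
    return messages[-n:] if n > 0 else []


scene = ["Alice enters.", "Bob waves.", "Alice sits.", "Bob asks about the map."]
print(describe_number_of_queries(RetrievalMethod.RECENT_CONTEXT, 3))
print(recent_context_queries(scene, 3))
```

With the recent-context method, raising the value widens the window of messages used as queries; with the two AI-driven methods, it increases how many queries or questions the AI must generate, which is why those methods get slower as the value grows.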

Answer length

The maximum response length of the generated answers.

Cache

Enables the agent-wide cache for long term memory retrieval. Any agents that share the same long term memory settings will share the same cache, which can reduce the number of queries sent to the Memory Agent.
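The idea of a cache shared by agents with identical settings can be sketched as a lookup keyed on the settings plus the query. This is a minimal Python illustration under stated assumptions, not the tool's implementation: `LTMSettings`, `SharedMemoryCache` and the `ask_memory_agent` callback are hypothetical stand-ins, and the settings fields are assumed to be the ones listed in this section.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class LTMSettings:
    # Hypothetical mirror of the Long Term Memory settings; frozen makes it hashable
    method: str
    number_of_queries: int
    answer_length: int


class SharedMemoryCache:
    """Sketch of an agent-wide cache: agents with equal settings share entries."""

    def __init__(self) -> None:
        self._store: dict[tuple[LTMSettings, str], str] = {}
        self.misses = 0

    def get_or_query(
        self,
        settings: LTMSettings,
        query: str,
        ask_memory_agent: Callable[[str], str],
    ) -> str:
        key = (settings, query)
        if key not in self._store:
            # Only a cache miss results in a call to the (hypothetical) memory agent
            self.misses += 1
            self._store[key] = ask_memory_agent(query)
        return self._store[key]


cache = SharedMemoryCache()
creator = LTMSettings("Context queries based on recent context", 3, 512)
other_agent = LTMSettings("Context queries based on recent context", 3, 512)
cache.get_or_query(creator, "Who is Alice?", lambda q: "Alice is a ranger.")
cache.get_or_query(other_agent, "Who is Alice?", lambda q: "Alice is a ranger.")
print(cache.misses)  # the second agent reuses the first agent's answer
```

Because the settings object is part of the key, an agent configured with a different retrieval method or answer length would not reuse these entries, which matches the section's description that only agents sharing the same settings share the cache.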

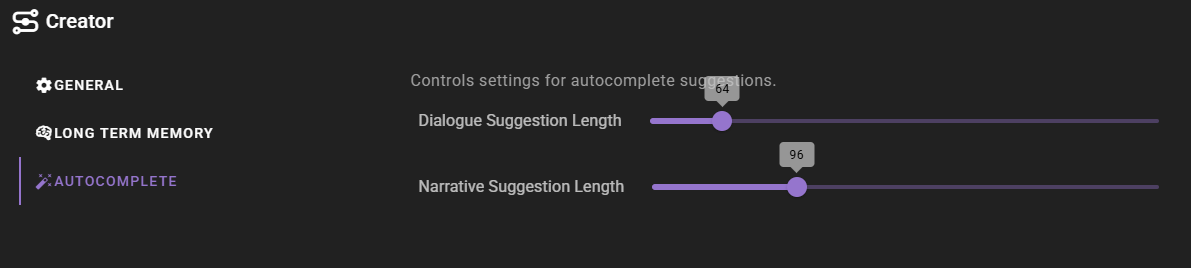

Autocomplete

Dialogue Suggestion Length

The maximum number of tokens to generate when autocompleting character actions.

Narrative Suggestion Length

The maximum number of tokens to generate when autocompleting narrative text.