The Google backend provides image generation, editing, and analysis capabilities using Google's Gemini image models. It supports text-to-image generation, image editing with reference images, and AI-powered image analysis.

Prerequisites

Before configuring the Google backend, you need to obtain a Google API key:

- Go to Google AI Studio

- Sign in with your Google account

- Create a new API key or use an existing one

- Copy the API key

Then configure it in Talemate:

- Open Talemate Settings → Application → Google

- Paste your Google API key in the "Google API Key" field

- Save your changes

API Key vs Vertex AI Credentials

The Visualizer agent uses the Google API key (not Vertex AI service account credentials). Make sure you're using the API key from Google AI Studio, not the service account JSON file used for Vertex AI.
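The two kinds of credential are easy to tell apart. A Google service account key file is a JSON document whose `"type"` field is `"service_account"`. An AI Studio API key is a single opaque string. The sketch below shows that check before you paste a value into the settings field. `classify_google_credential` is a hypothetical helper, not part of Talemate.

```python
import json


def classify_google_credential(value: str) -> str:
    """Hypothetical helper: guess which kind of Google credential a string is.

    Returns "service_account_json" for a Vertex AI style service account key,
    "api_key" for a single-token key string, and "unknown" otherwise.
    """
    text = value.strip()
    if text.startswith("{"):
        try:
            data = json.loads(text)
        except json.JSONDecodeError:
            return "unknown"
        # Service account key files carry "type": "service_account".
        if isinstance(data, dict) and data.get("type") == "service_account":
            return "service_account_json"
        return "unknown"
    # An API key is one token with no whitespace in it.
    if text and not any(ch.isspace() for ch in text):
        return "api_key"
    return "unknown"


if __name__ == "__main__":
    print(classify_google_credential("example-api-key-123"))  # api_key
    print(classify_google_credential('{"type": "service_account", "project_id": "demo"}'))  # service_account_json
```

Only a value classified as `api_key` belongs in the "Google API Key" field.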

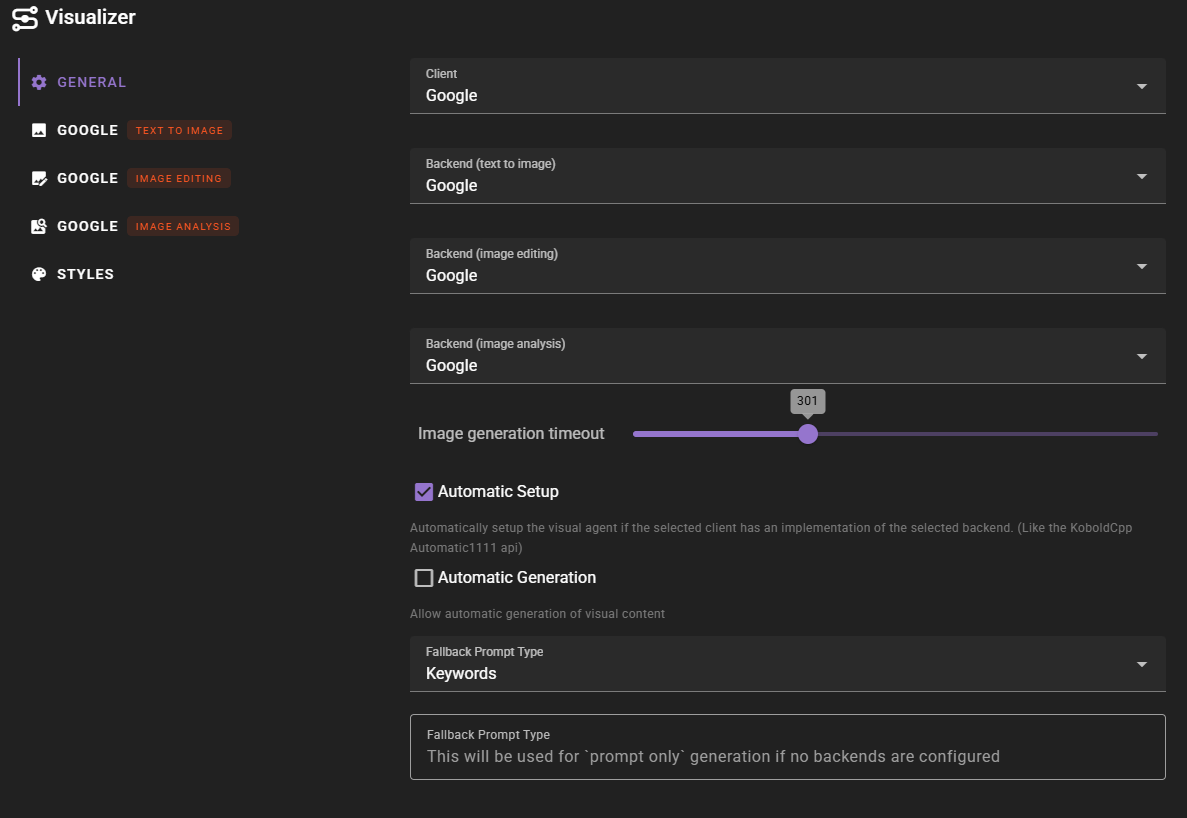

Configuration

In the Visualizer agent settings, select Google as your backend for text-to-image generation, image editing, image analysis, or any combination of these. Each operation can be configured separately.

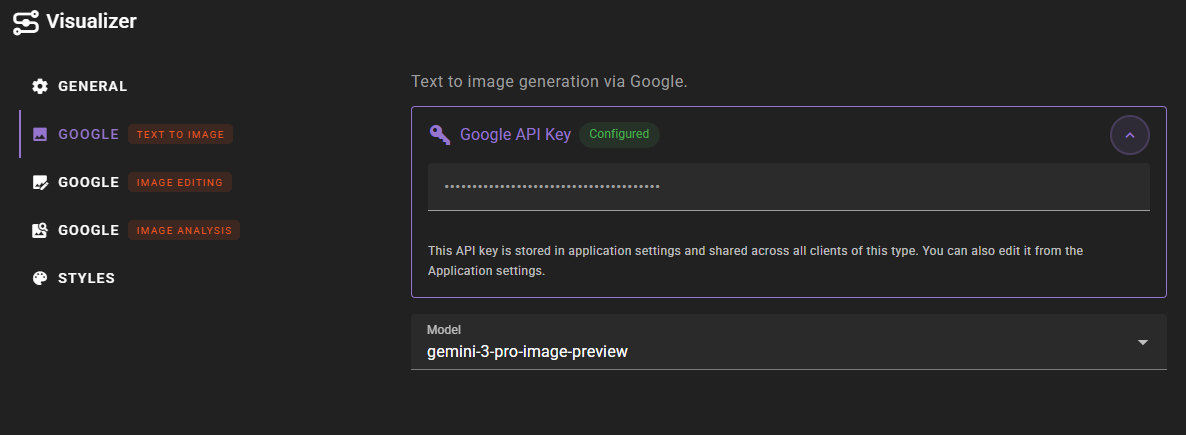

Text-to-Image Configuration

For text-to-image generation, configure the following settings:

- Google API Key: Your Google API key (configured globally in Talemate Settings)

- Model: Select the image generation model to use:

  - gemini-2.5-flash-image: Faster generation, good quality

  - gemini-3-pro-image-preview: Higher quality, slower generation

The Google backend automatically sets the aspect ratio based on the format you select:

- Landscape: 16:9 aspect ratio

- Portrait: 9:16 aspect ratio

- Square: 1:1 aspect ratio
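The format-to-ratio mapping above is essentially a small lookup table. The sketch below writes it out in Python. `ImageFormat`, `aspect_ratio_for`, and `ratio_value` are hypothetical names that do not come from Talemate's code. The ratio strings are the ones listed above.

```python
from enum import Enum


class ImageFormat(Enum):
    """Hypothetical stand-in for the format options in the Visualizer settings."""
    LANDSCAPE = "landscape"
    PORTRAIT = "portrait"
    SQUARE = "square"


# Aspect ratio used for each format, as listed above.
ASPECT_RATIOS = {
    ImageFormat.LANDSCAPE: "16:9",
    ImageFormat.PORTRAIT: "9:16",
    ImageFormat.SQUARE: "1:1",
}


def aspect_ratio_for(fmt: ImageFormat) -> str:
    """Return the aspect ratio string for a format."""
    return ASPECT_RATIOS[fmt]


def ratio_value(ratio: str) -> float:
    """Convert a "W:H" string to width divided by height (>1 means wider than tall)."""
    width, height = (int(part) for part in ratio.split(":"))
    return width / height
```

For example, `aspect_ratio_for(ImageFormat.PORTRAIT)` returns `"9:16"`, which `ratio_value` turns into a value below 1 (taller than wide).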

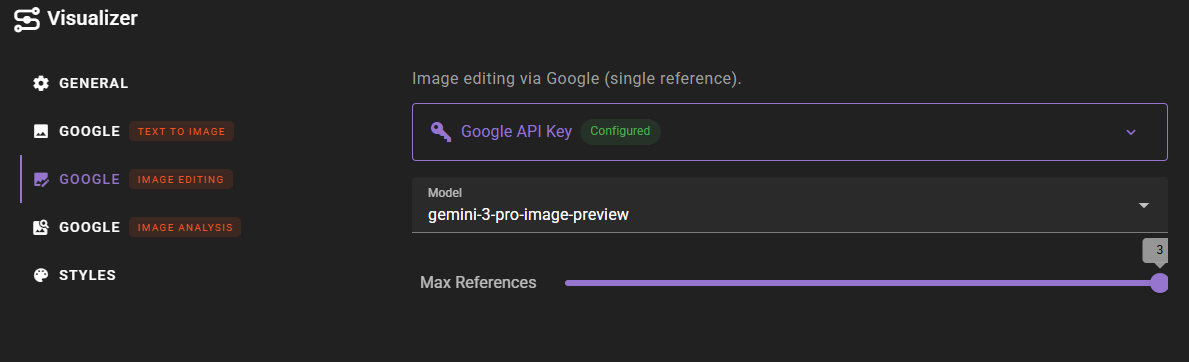

Image Editing Configuration

For image editing, configure the same settings as for text-to-image, plus one additional option:

- Google API Key: Your Google API key

- Model: Select the image generation model (same options as text-to-image)

- Max References: Configure the maximum number of reference images (1-3). This determines how many reference images you can provide when editing an image.

Reference Images

Google's image editing models can use up to 3 reference images to guide the editing process. The "Max References" setting controls how many reference images Talemate sends to the API. Adjust it to suit your needs: more references can give the model better context for complex edits.
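The sketch below shows how a cap like Max References could be applied. It assumes the candidate references are already in priority order and that the first ones are sent. `select_references` is hypothetical, and Talemate's actual selection logic may differ.

```python
from typing import Sequence, TypeVar

T = TypeVar("T")

# The Max References setting allows values from 1 to 3.
MIN_REFERENCES = 1
MAX_REFERENCES = 3


def select_references(references: Sequence[T], max_references: int) -> list[T]:
    """Return at most `max_references` references, keeping their original order.

    The setting itself is clamped to the supported 1 to 3 range first.
    """
    limit = max(MIN_REFERENCES, min(max_references, MAX_REFERENCES))
    return list(references[:limit])
```

With four candidate images and Max References set to 2, only the first two would be sent. Any setting above 3 is treated as 3.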

Image Analysis Configuration

For image analysis, configure the following:

- Google API Key: Your Google API key

- Model: Select a vision-capable text model:

  - gemini-2.5-flash: Fast analysis, good for general use

  - gemini-2.5-pro: Higher quality analysis

  - gemini-3-pro-preview: Latest model with improved capabilities

Analysis Models

Image analysis uses text models that support vision capabilities, not the image generation models. These models can analyze images and provide detailed descriptions, answer questions about image content, and extract information from visual content.
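The split between generation models and analysis models works like a per-operation allow-list. The sketch below builds one from the model names listed on this page. The `MODELS_BY_OPERATION` dictionary and `check_model` function are hypothetical. The lists would need updating as Google adds or retires models.

```python
# Models listed on this page for each Visualizer operation.
MODELS_BY_OPERATION = {
    "text_to_image": {"gemini-2.5-flash-image", "gemini-3-pro-image-preview"},
    "image_edit": {"gemini-2.5-flash-image", "gemini-3-pro-image-preview"},
    "image_analysis": {"gemini-2.5-flash", "gemini-2.5-pro", "gemini-3-pro-preview"},
}


def check_model(operation: str, model: str) -> None:
    """Raise ValueError if `model` is not listed for `operation`."""
    allowed = MODELS_BY_OPERATION.get(operation)
    if allowed is None:
        raise ValueError(f"unknown operation: {operation!r}")
    if model not in allowed:
        raise ValueError(
            f"{model!r} is not listed for {operation}; choose one of {sorted(allowed)}"
        )
```

Passing an image generation model such as `gemini-2.5-flash-image` for `image_analysis` raises an error. This is the mistake the note above warns against.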

Usage

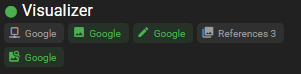

Once configured, the Google backend will appear in the Visualizer agent status with green indicators showing which capabilities are available.

The status indicators show:

- Text to Image: Available when a text-to-image backend is configured

- Image Edit: Available when an image editing backend is configured (also shows the max references value, if set)

- Image Analysis: Available when an image analysis backend is configured
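The indicator logic shows one label for each configured operation. The sketch below models it with a hypothetical `VisualizerConfig` that has one optional backend name per operation. This is not Talemate's actual data model. The exact wording of the Image Edit label is also an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VisualizerConfig:
    """Hypothetical per-operation backend selection (None means not configured)."""
    text_to_image: Optional[str] = None
    image_edit: Optional[str] = None
    image_analysis: Optional[str] = None
    max_references: Optional[int] = None


def status_indicators(config: VisualizerConfig) -> list[str]:
    """Return the indicator labels that would be shown for this configuration."""
    labels = []
    if config.text_to_image:
        labels.append("Text to Image")
    if config.image_edit:
        label = "Image Edit"
        # Mention the reference limit only when one has been set.
        if config.max_references:
            label += f" (max references: {config.max_references})"
        labels.append(label)
    if config.image_analysis:
        labels.append("Image Analysis")
    return labels
```

Each operation is checked on its own. For example, configuring Google only for image analysis produces a single "Image Analysis" indicator.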

Model Recommendations

Text-to-Image and Image Editing

- gemini-2.5-flash-image: Best for faster generation and general use. Good balance of speed and quality.

- gemini-3-pro-image-preview: Best for higher quality results when speed is less important. Use when you need the best possible image quality.

Image Analysis

- gemini-2.5-flash: Best for quick analysis and general use cases. Fast responses with good accuracy.

- gemini-2.5-pro: Best for detailed analysis requiring higher accuracy and more nuanced understanding.

- gemini-3-pro-preview: Best for the latest capabilities and most advanced analysis features.

Prompt Formatting

The Google backend uses Descriptive prompt formatting by default. This means prompts are formatted as natural language descriptions rather than keyword lists. This works well with Google's Gemini models, which are designed to understand natural language instructions.

When generating images, provide detailed descriptions of what you want to create. For image editing, describe the changes you want to make in natural language.
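The sketch below contrasts the two prompt styles by turning keyword-style details into one descriptive sentence. `to_descriptive_prompt` is hypothetical and only illustrates the idea. It is not how Talemate builds its prompts. The subject is passed with its own article ("a knight", "an owl") so the sketch avoids guessing articles.

```python
def to_descriptive_prompt(subject: str, details: list[str], setting: str = "") -> str:
    """Join a subject and keyword-style details into one natural-language sentence."""
    if not details:
        described = subject
    elif len(details) == 1:
        described = f"{subject} with {details[0]}"
    else:
        described = f"{subject} with {', '.join(details[:-1])} and {details[-1]}"
    # Capitalize the first letter to start the sentence.
    sentence = described[0].upper() + described[1:]
    if setting:
        sentence += f", {setting}"
    return sentence + "."


# The same request as a keyword list and as a descriptive prompt.
keywords = "knight, silver armor, red cloak, castle ruins, dusk"
descriptive = to_descriptive_prompt(
    "a knight", ["silver armor", "a red cloak"], "standing in castle ruins at dusk"
)
print(descriptive)  # A knight with silver armor and a red cloak, standing in castle ruins at dusk.
```

The descriptive form spells out how the keywords relate to each other (who wears what, and where). A keyword list leaves the model to infer those relationships.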