Settings

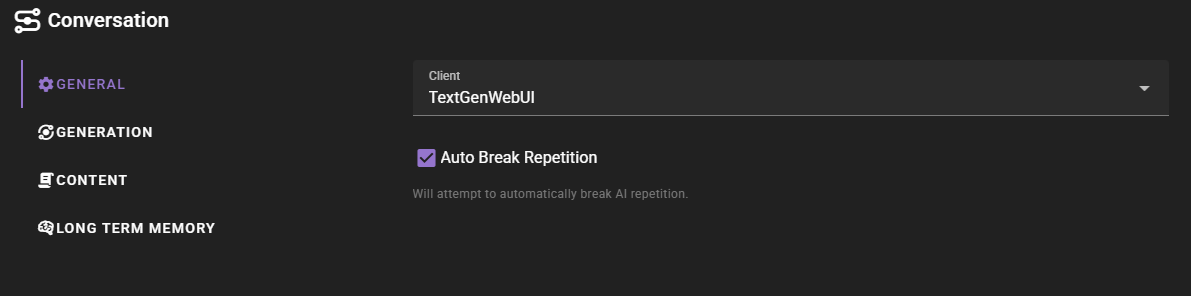

General

Inference parameters

Inference parameters are NOT configured through any individual agent.

Please see the Inference presets section for more information on how to configure inference parameters.

Client

The text-generation client to use for conversation generation.

Auto Break Repetition

If checked and Talemate detects a repetitive response (based on a threshold), it will automatically regenerate the response with increased randomness parameters.
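The idea behind this setting can be illustrated with a minimal sketch. It is not Talemate's actual implementation: the similarity measure (`difflib.SequenceMatcher`), the function names, the threshold, and the temperature bump are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def is_repetitive(response, history, threshold=0.9):
    """Hypothetical check: True if the response closely matches an earlier message."""
    return any(
        SequenceMatcher(None, response, previous).ratio() >= threshold
        for previous in history
    )


def generate_with_retry(generate, history, temperature=0.5, bump=0.25,
                        threshold=0.9, max_retries=2):
    """Call `generate(temperature)` and retry with more randomness on repetition.

    `generate` is a stand-in for whatever produces the dialogue text.
    """
    response = generate(temperature)
    for _ in range(max_retries):
        if not is_repetitive(response, history, threshold):
            break
        temperature += bump  # assumed way of "increasing randomness"
        response = generate(temperature)
    return response
```

In this sketch the retry loop stops as soon as a response falls below the similarity threshold, so a non-repetitive first response costs no extra generation.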

Deprecated

This setting will soon be removed in favor of the new Editor Agent Revision Action.

Natural flow was moved

The natural flow settings have been moved to the Director Agent settings as part of the auto direction feature.

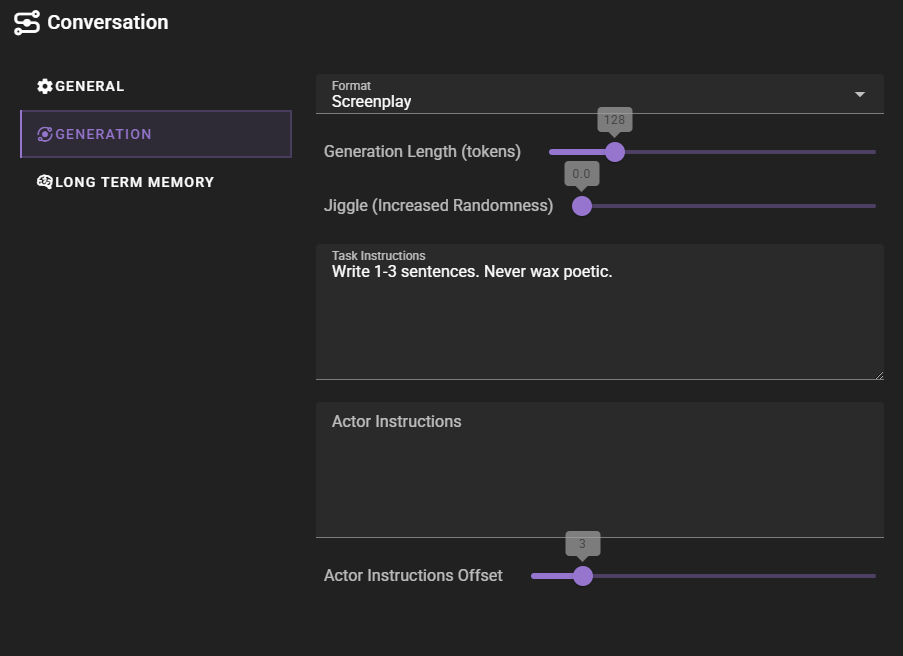

Generation

Format

The dialogue format as the AI will see it.

There are currently two choices:

- Screenplay
- Chat (legacy)

Visually this makes no difference to what you see. It may, however, affect how the AI interprets the dialogue.

Generation Length

The maximum length of the generated dialogue, in tokens.

Jiggle

The amount of randomness to apply to the generation. This can help to avoid repetitive responses.

Task Instructions

Extra instructions for the generation. These should be short and generic, as they are applied to all characters. They are appended to the existing task instructions in the conversation prompt BEFORE the conversation history.

Actor Instructions

General, broad instructions for ALL actors in the scene. These are appended to the existing actor instructions in the conversation prompt AFTER the conversation history.

Actor Instructions Offset

If greater than 0, offsets the actor instructions (both broad and character-specific) into the history by that many turns. Some LLMs struggle to generate coherent continuations if the scene is interrupted by instructions right before the AI is asked to generate dialogue. This setting lets you shift the instructions further back.
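To make the ordering concrete, here is a rough sketch of how the three settings above could shape a prompt: task instructions before the history, actor instructions after it, and the offset moving the actor instructions back by a number of turns. The function name and the list-of-lines prompt representation are assumptions for illustration, not Talemate's actual prompt templates.

```python
def build_prompt(task_instructions, history, actor_instructions, offset=0):
    """Hypothetical prompt assembly, returned as a list of lines.

    offset=0 places the actor instructions after the full history;
    offset=N places them N turns before the end of the history.
    """
    # Clamp so a large offset places the instructions at the start of the history.
    index = max(len(history) - max(offset, 0), 0)
    return (
        [task_instructions]
        + history[:index]
        + [actor_instructions]
        + history[index:]
    )


history = ["A: Hi.", "B: Hello.", "A: How are you?"]
for line in build_prompt("[task]", history, "[actor]", offset=2):
    print(line)
```

With an offset of 2, the last two turns follow the actor instructions, so the model continues directly from dialogue rather than from an instruction block.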

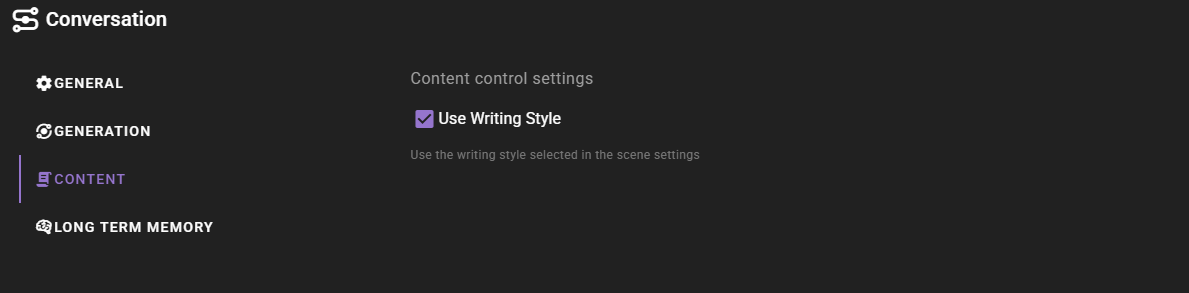

Content

Content settings control what contextual information is included in the prompts sent to the AI when generating character dialogue.

Use Scene Intent

When enabled (default), the scene intent (overall intention) will be included in the conversation prompt. This helps the AI generate dialogue that aligns with your story goals and the current scene direction.

Disable this if you want the AI to generate dialogue without being influenced by the scene direction settings.

Use Writing Style

When enabled (default), the writing style selected in the Scene Settings will be applied to the generated dialogue.

Disable this if you want the AI to generate dialogue without following the scene's writing style template.

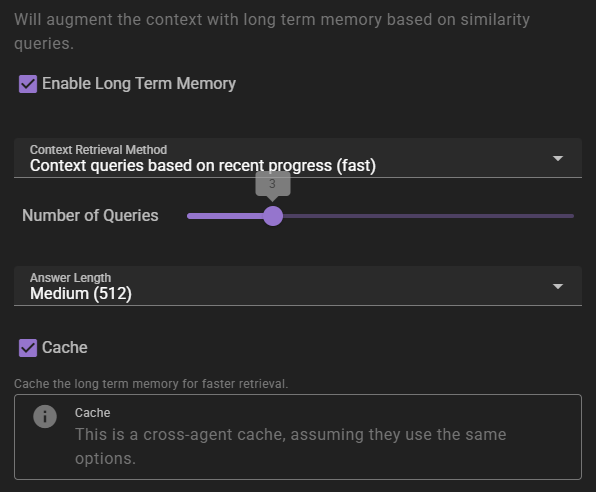

Long Term Memory

If enabled, the Memory Agent selects information by relevance and injects it into the context.

Context Retrieval Method

The method used for long term memory selection.

- Context queries based on recent context: takes the last 3 messages in the scene and selects relevant context from them. This is the fastest method, but may not always be the most relevant.
- Context queries generated by AI: generates a set of context queries based on the current scene and selects relevant context from them. This is slower, but may be more relevant.
- AI compiled questions and answers: uses the AI to generate a set of questions and answers based on the current scene and selects relevant context from them. This is the slowest method, and not necessarily better than the others.

Number of queries

This setting means different things depending on the context retrieval method.

- For Context queries based on recent context, this is the number of messages to consider.
- For Context queries generated by AI, this is the number of queries to generate.
- For AI compiled questions and answers, this is the number of questions to generate.
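As a rough illustration of the simplest method (context queries based on recent context), the sketch below uses the last few scene messages as queries and picks the best-matching memory entry for each. The function names are hypothetical, and the word-overlap score is only a stand-in for whatever relevance search the Memory Agent actually performs.

```python
import re


def queries_from_recent_context(messages, number_of_queries=3):
    """Use the last N scene messages as retrieval queries (hypothetical helper)."""
    return messages[-number_of_queries:] if number_of_queries > 0 else []


def _words(text):
    return set(re.findall(r"\w+", text.lower()))


def retrieve(queries, memory_entries):
    """Pick the best-matching memory entry per query using toy word overlap."""
    selected = []
    for query in queries:
        best = max(memory_entries, key=lambda entry: len(_words(query) & _words(entry)))
        if _words(query) & _words(best) and best not in selected:
            selected.append(best)
    return selected


messages = [
    "We left the harbor at dawn.",
    "The storm is getting worse.",
    "Find the lighthouse keeper.",
]
memory = ["Orin is the lighthouse keeper", "Spiced cider is sold at the tavern"]
print(retrieve(queries_from_recent_context(messages, 1), memory))
```

Raising the number of queries pulls in older messages as well, which widens what gets retrieved but can also surface entries that only loosely relate to the current moment.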

Answer length

The maximum response length of the generated answers.

Cache

Enables the agent-wide cache for long term memory retrieval. Any agents that share the same long term memory settings will share the same cache, which can reduce the number of queries to the Memory Agent.
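One way to picture a cache shared between agents with identical settings is to key it on the settings plus the context being queried. This is a sketch under that assumption only: the class, the setting names, and the keying scheme are hypothetical and do not describe how Talemate stores its cache.

```python
class RetrievalCache:
    """Hypothetical cache keyed on (long term memory settings, context)."""

    def __init__(self):
        self._store = {}
        self.misses = 0  # counts how often the memory lookup actually ran

    def get(self, settings, context, retrieve):
        # Agents with identical settings produce the same key and share results.
        key = (tuple(sorted(settings.items())), context)
        if key not in self._store:
            self.misses += 1
            self._store[key] = retrieve(context)
        return self._store[key]


cache = RetrievalCache()
settings = {"retrieval_method": "recent_context", "number_of_queries": 3}
lookup = lambda context: [f"memory for: {context}"]

cache.get(settings, "scene text", lookup)        # first agent: runs the lookup
cache.get(dict(settings), "scene text", lookup)  # second agent, same settings: cached
print(cache.misses)
```

An agent with different long term memory settings would produce a different key and trigger its own lookup.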